This week we finally reached GA status for VMware vFabric SQLFire - a memory-optimized distributed SQL database delivering dynamic scalability and high performance for data-intensive modern applications.

In this post, I will highlight some important elements in our design and draw out some of our core values.

The current breed of popular NoSQL stores promotes different approaches to data modelling, storage architecture and consistency models to solve the scalability and performance problems in relational databases. The overarching message from all of them seems to be that the core of the problem with traditional relational databases is SQL.

But, ironically, the core of the scalability problem has little to do with SQL itself. The real challenge is the manner in which the traditional DB manages its disk buffers, locks and latches through a centralized architecture to preserve strict ACID properties. Here is a slide from research at MIT and Brown University on where the time is spent in OLTP databases.

Design center

With SQLFire we change the design center in a few interesting ways:

1) Optimize for main memory: we assume memory is abundant across a cluster of servers and optimize the design through highly concurrent data structures, all resident in memory. The design is not concerned with buffering contiguous disk blocks in memory but rather manages application rows in in-memory hash maps, in a form that can be directly consumed by clients. Changes are synchronously propagated to redundant copies in the cluster for HA.

2) Rethink ACID transactions: There is no support for strictly serializable transactions; instead we assume that most applications can get by with the simpler "read committed" and "repeatable read" semantics. Instead of worrying about "write ahead" transaction logs on disk, all transactional state resides in distributed memory and uses a non-2PC commit algorithm optimized for short-duration, non-overlapping transactions. The central theme is to avoid any single point of contention, such as a distributed lock service. See some details here.

3) "Partition aware DB design": Almost every single high scale DB solution offers a way to linearly scale by hashing keys to a set of partitions. But how do you make SQL queries and DML scale when they involve joins or complex conditions? Given that distributed joins inherently don't scale, we promote the idea that the designer should think about common data access patterns and choose the partitioning strategy accordingly. To make things relatively simple for the designer, we extended the DDL (Data Definition Language in SQL) so the designer can specify how related data should be colocated (for instance, 'create table Orders (...) colocate with Customer' tells us that the order records for a customer should always be colocated on the same partition as that customer). Colocation makes join processing and query optimization a local partition problem and avoids large transfers of intermediate data sets. The design assumes classic OLTP workload patterns where the vast majority of individual requests can be pruned to a few nodes and the concurrent workload from all users is spread across the entire data set (and hence across all the partitions). Look here for some details.

4) Shared nothing logs on disk: Disk stores are merely "append only" logs, designed so that application writes are never exposed to disk seek latencies. Writes are synchronously streamed to disk on all replicas. A lot of the disk store design looks similar to other NoSQL systems - rolling logs, background/offline compression, memory tables pointing to disk offsets, etc. But the one aspect that represents core IP is managing consistent copies on disk in the face of failures. Given that distributed members can come and go, how do we make sure that the disk state a member is working with is the one it should be working with? I cover our "shared nothing disk architecture" in a lot more detail here.

5) Parallelize data access and application behavior: We extend the classic stored procedure model by allowing applications to parallelize a procedure across the cluster, or just a subset of nodes, by hinting at the data the procedure depends on. This application hinting is done by supplying a "where clause" that is used to determine where to route and parallelize the execution. Unlike traditional databases, procedures can be arbitrary application Java code (you can in fact embed the cluster members in your Spring container) and run colocated with the data. Yes, literally in the same process space where the data is stored. Controversial, yes, but now your application code can do a scan as efficiently as the database engine.

6) Dynamic rebalancing of data and behavior: This is the act of figuring out which data buckets should be migrated when capacity is added (the cluster grows) or removed, and how to do this without causing consistency issues or introducing contention points for concurrent readers and writers. Here is the patent that describes some aspects of the design.
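To make the colocation idea from point 3 a bit more concrete, here is a minimal Java sketch. It is not SQLFire code: it assumes a toy in-memory model in which both Customer and Orders rows are routed by hashing the customer id, and the class `ColocationSketch`, its methods and the four-partition setup are hypothetical names chosen purely for illustration.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Toy model of hash partitioning with colocation (illustrative only, not SQLFire internals). */
final class ColocationSketch {

    /** One partition holds the rows of both tables that hash to it (hypothetical layout). */
    static final class Partition {
        final Map<Integer, String> customers = new HashMap<>();              // customerId -> name
        final Map<Integer, List<Double>> ordersByCustomer = new HashMap<>(); // customerId -> order amounts
    }

    private final List<Partition> partitions = new ArrayList<>();

    ColocationSketch(int partitionCount) {
        for (int i = 0; i < partitionCount; i++) {
            partitions.add(new Partition());
        }
    }

    /** Both tables are routed by customer id; sharing the routing key is what colocates them. */
    int partitionFor(int customerId) {
        return Math.floorMod(Integer.hashCode(customerId), partitions.size());
    }

    void insertCustomer(int customerId, String name) {
        partitions.get(partitionFor(customerId)).customers.put(customerId, name);
    }

    void insertOrder(int customerId, double amount) {
        partitions.get(partitionFor(customerId)).ordersByCustomer
                .computeIfAbsent(customerId, k -> new ArrayList<>())
                .add(amount);
    }

    /** A Customer-to-Orders join for one customer: it reads exactly one partition. */
    double totalSpent(int customerId) {
        Partition p = partitions.get(partitionFor(customerId));
        List<Double> orders = p.ordersByCustomer.get(customerId);
        if (!p.customers.containsKey(customerId) || orders == null) {
            return 0.0;
        }
        double total = 0.0;
        for (double amount : orders) {
            total += amount;
        }
        return total;
    }

    public static void main(String[] args) {
        ColocationSketch db = new ColocationSketch(4);
        db.insertCustomer(7, "Ada");
        db.insertOrder(7, 10.0);
        db.insertOrder(7, 2.5);
        System.out.println("partition=" + db.partitionFor(7) + " total=" + db.totalSpent(7));
    }
}
```

Because both tables are routed by the same key, `totalSpent` never has to look beyond a single partition, which is the property the 'colocate with' clause is meant to give the query engine.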

Embedded or a client-server topology

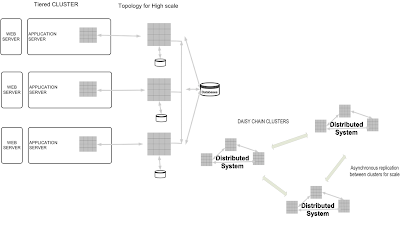

SQLFire supports switching from the classic client-server topology (your DB runs in its own processes) to an embedded mode where the DB cluster and the application cluster are one and the same (for Java apps).

We believe the embedded model will be very useful in scenarios where the data sets are relatively small. It simplifies deployment concerns and at the same time provides a significant boost in performance when replicated tables are in use.

All you do is change the DB URL from

'jdbc:sqlfire://server_Host:port' to 'jdbc:sqlfire:;mcast-port=portNum' and now all your application processes that use the same DB URL will become part of a single distributed system. Essentially, the mcast-port value identifies a broadcast channel for membership gossiping. New servers will automatically join the cluster once authenticated. Any replicated tables will automatically get hosted in the new process, and partitioned tables could get rebalanced and share some of their data with the new process. All this is abstracted away from the developer.

As far as the application is concerned, you just create connections and execute SQL like with any other DB.
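As a rough illustration of that switch, the sketch below builds the two URL forms quoted above and opens a connection through plain JDBC. The helper class `SqlFireUrls`, the host name and the port numbers are placeholders made up for this example, and actually connecting assumes the SQLFire JDBC driver is on the classpath.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.SQLException;

/** Hypothetical helper that builds the two URL forms described in this post. */
final class SqlFireUrls {

    private SqlFireUrls() {}

    /** Client-server topology: the DB runs in its own processes. */
    static String clientServerUrl(String host, int port) {
        return "jdbc:sqlfire://" + host + ":" + port;
    }

    /** Embedded topology: this JVM joins the cluster on the given multicast port. */
    static String embeddedUrl(int mcastPort) {
        return "jdbc:sqlfire:;mcast-port=" + mcastPort;
    }

    /** Standard JDBC call; it only succeeds if a driver for the URL is on the classpath. */
    static Connection open(String url) throws SQLException {
        return DriverManager.getConnection(url);
    }

    public static void main(String[] args) {
        // Host and ports below are placeholders, not recommended values.
        System.out.println(clientServerUrl("dbhost.example.com", 1527));
        System.out.println(embeddedUrl(12333));
    }
}
```

The rest of the application code stays the same in either topology; only the string passed to `open` changes.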

How well does it perform and scale?

Here are the results of a simple benchmark done internally using commodity (2 CPU) machines showcasing linear scaling with concurrent user load. I will soon augment this with more interesting workload characterization. The details are here.

Comparing SQLFire and GemFire

Here is a high-level view of how the two products compare. I hope to add a blog post that provides specific details on the differences and the use cases where one might apply better than the other.

SQLFire benefits from the years of commercially deployed production code found in GemFire. SQLFire adds a rich SQL engine with the idea that folks can now manage operational data primarily in memory, partitioned across any number of nodes and with a disk architecture that avoids disk seeks. Note that the two offerings, SQLFire and GemFire, are completely separate products and are deployed separately.

As always, I would love to get your candid feedback (link to our forum). I assure you that trying it out is very simple - just like using Apache Derby or H2.

Get to the download, docs and quickstart all from here. The developer license is perpetual and works on up to 3 server nodes.